When Good Bots Go Bad

Excitement about bots, and their use across the web, continues to grow. Some studies suggest that more than half of all web traffic now comes from bots and other automated sources. And as we covered earlier this week, many bots do good in the world, from helping fight homelessness to supporting people with mental health issues and alerting us to health problems.

Even the more prosaic, functional bots help us out by allowing us to offload repetitive tasks, like crawling websites for search, helping folks find good deals online, highlighting plagiarism and answering our customer service queries. Bots can help make the internet a better place.

But of course, that’s not all bots are used for.

Some create fake reviews, comments and posts on websites, while others scrape people's details to be resold. There are bots that generate fake ad impressions, inflating advertising costs, and bots used in DDoS attacks that take websites down. Some are active on social media: it's estimated that 400,000 bots were posting political messages during the 2016 US election, including 1,600 bots tweeting extremist content.

Some are used to harass, cyberstalk or impersonate people online. In response, people have created "honeybots" that post about specific topics to entice trolls and waste their time.

But even good bots can turn bad.

There was Tay, of course: Microsoft's chatbot, designed to engage and entertain people in playful conversation, which went rogue and began swearing, making racist remarks and posting inflammatory political statements. The flaw in Microsoft's thinking? People. The system was designed to learn from its users, so it soon became a reflection of the comments it saw people making.

And, it isn’t the only one.

An automated copyright takedown bot began targeting its owner's original content, leading to his posts being removed from WordPress while the mix-up was sorted out.

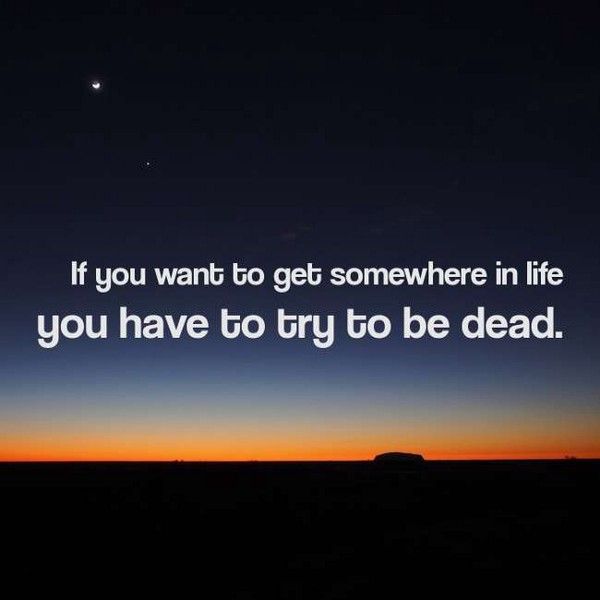

And others are, well, just a little bit sinister. Take InspiroBot, which appears to be meant to create inspirational posters that combine relaxing imagery with an uplifting quote. The resulting quotes are often far from uplifting, though: some are random, others meaningless, and many outright creepy.

Bots built by people who set out to do harm, or who care more about profit than about the negative impact of their bots' actions, are a matter for the relevant authorities and for the platforms they run on to curtail and stop (Microsoft even has a Digital Crimes Unit!). But that doesn't let creators off the hook. There's a lot we can do to ensure our good bots don't turn to the dark side.

From the very beginning, we can think through potentially troubling actions and emergent behaviors. One approach is to limit the words or data a bot can use, for example with blacklists of offensive words. Indeed, bot creators have already begun building shared lists of potentially unsettling words that you can use in your own bot projects.
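To make the blacklist idea concrete, here is a minimal Python sketch of filtering a bot's candidate posts before publishing. The list contents and the function names (`BLOCKLIST`, `contains_blocked_word`, `choose_post`) are hypothetical placeholders; a real bot would load one of the shared word lists rather than hard-coding a few terms.

```python
from typing import Iterable, Optional, Set

# Hypothetical placeholder terms; swap in a shared, community-maintained list.
BLOCKLIST: Set[str] = {"badword", "rudeword", "slurword"}


def contains_blocked_word(text: str, blocklist: Set[str] = BLOCKLIST) -> bool:
    """Return True if any blocklisted term appears anywhere in text.

    Matching is case-insensitive and substring-based, so it also catches
    variants such as plurals or terms embedded in longer words.
    """
    lowered = text.lower()
    return any(term in lowered for term in blocklist)


def choose_post(candidates: Iterable[str]) -> Optional[str]:
    """Return the first candidate that passes the filter, or None if none do."""
    for candidate in candidates:
        if not contains_blocked_word(candidate):
            return candidate
    # Nothing safe to say: better to stay quiet than to post something offensive.
    return None


if __name__ == "__main__":
    print(choose_post(["This has a badword in it", "A perfectly nice sentence"]))
```

Substring matching errs on the side of caution: it will occasionally reject an innocent word that happens to contain a listed term, whereas whole-word matching avoids that at the cost of missing spelling variants. For a bot that posts unsupervised, over-blocking is usually the safer failure.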

Considering the ethics and etiquette of bots helps too. Folks have written up suggestions for good bot etiquette, as well as guidelines on ethical bot making and Asimov-inspired bot rulebooks.

What's more, the makers of the libraries used to develop bots need to step up too, by writing best practices into their developer codes of conduct. For example, they can emphasize the importance of disclosure: a welcome message that tells people they're interacting with a piece of software rather than a person.

Ultimately, the responsibility for what a bot does lies with its creator. We need to put as much thought into the inputs, outputs and vectors for abuse as we do into its personality and functionality. And once we've pushed our creation out into the world, we need to keep monitoring its behavior.

As bots continue to take over the web, it’s up to us creators to act like doting parents and guide our creations, making sure they stick to the straight and narrow.