Scrape the Data You Need with Cheerio!

We live in the age of the API. Data is (for better or worse) a commodity, and it's big business to offer data as a service via APIs. Even still, some useful data just isn't available online via a developer-friendly API.

Well, sort of!

Let's say there's a website with some useful data on it. Maybe it's the hours of a restaurant, a list of products, or the latest breaking news. This site doesn't have a traditional API, but then again, what is HTTP? We ask for a page, and get an HTML response. That's practically an XML API right there! We may just have a way to get that data with a program after all.

Finding information in HTML is easy when you're in a browser — the contents of an element are one document.querySelector away, and if you're using jQuery, it's as easy as $, with extra tools to boot! We are off to the races.

One more snag awaits us. Because of the web's strict same-origin policy, we (reasonably, yet irritatingly) can't just fetch the contents of another web page from our page without permission. We know that node (or even curl, for that matter) would have no problem, so we can code up a little server that fetches the page for us. But node doesn't know how to parse HTML, and even if it did, where's my snazzy jQuery for slicing and dicing the results?

📦 cheerio's npm listing 📖 cheerio documentation

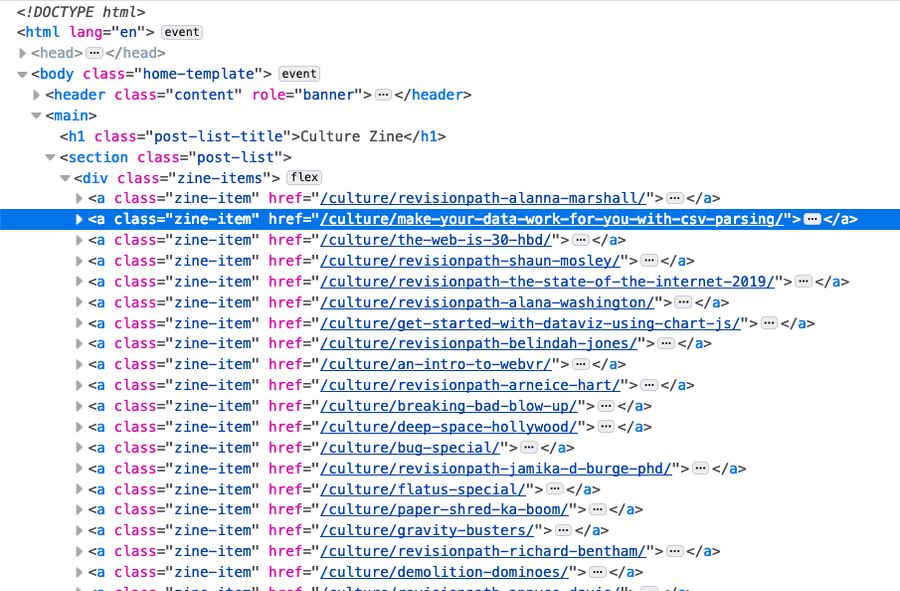

For the moment, let's pretend this site doesn't have an RSS feed (it totally does). I'd love to get a list of the latest posts for display in a widget. Okay, no RSS, but what does the HTML look like?

Clean and easy to read! I see a flat container with the class zine-items, full of zine-item elements. A zine-item looks something like this.

We've got the URL of the post, the title of the post, and some category metadata. Now that we know what we're looking for, we can deploy cheerio!

Here's the full working demo. Feel free to click around; we'll break it down below.

The Cheerio Starter App

We're demoing this library by building a tiny app that grabs posts from the Glitch Culture Zine. The app is based on the basic express starter, plus the request library for fetching data from a URL and, of course, cheerio. We're serving a minimal HTML page with a script that fetches data from our server at the URL /glitch-culture. I'm not going to go too deep into the client side; after fetching the list of posts, we're just making some list items and populating them with data.
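The client side isn't the focus here, but for completeness, a minimal sketch of it might look like the following. It assumes the server responds with an array of objects with title, url, and category fields (hypothetical names that must match whatever the server actually sends), and it takes the document as a parameter so the rendering can be tried outside a browser. Using textContent rather than innerHTML means any markup that sneaks into a scraped title is shown as plain text instead of being interpreted.

```javascript
// Client-side sketch (hypothetical): fetch the scraped posts from our own
// server and render them as list items. The field names title/url/category
// are assumptions and must match the JSON the server actually returns.

// Build one <li><a href="...">title</a> (category)</li> per post.
// textContent (not innerHTML) keeps any markup in scraped titles inert.
function renderPosts(list, posts, doc = document) {
  for (const post of posts) {
    const item = doc.createElement('li');
    const link = doc.createElement('a');
    link.href = post.url;
    link.textContent = post.title;
    item.appendChild(link);
    if (post.category) {
      item.appendChild(doc.createTextNode(` (${post.category})`));
    }
    list.appendChild(item);
  }
}

// Request /glitch-culture from our own origin, so the same-origin policy
// doesn't get in the way, then render whatever comes back.
async function loadPosts(list, doc = document) {
  const response = await fetch('/glitch-culture');
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }
  renderPosts(list, await response.json(), doc);
}
```

In the page itself, calling loadPosts(document.querySelector('#posts')) would kick things off, assuming a list element with the hypothetical id posts.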

The core of our example is in server.js, specifically the handler for app.get('/glitch-culture'). When the client requests this URL, the following happens:

- Fetch the HTML: we use the request library to fetch the HTML from https://glitch.com/culture/, and we receive it in a callback function.

- Load the HTML into cheerio: Unlike jQuery in the browser, where $ is available globally, on the server we need to tell cheerio which document $ should even be searching. To do this, the HTML response is fed into cheerio.load, and for familiarity's sake, the query function it returns is saved to a variable named $. From this point on, we can query the HTML as if it were in a jQuery-enabled page!

- Grab the list of zine-items: This is classic jQuery-style querying; $('.zine-item') gets us our list.

- Extract the information from each item: The title and metadata are text inside elements, so .text() gets us those. The URL comes from the href attribute on the item's link, so that calls for .attr('href').

- Send the list as JSON: Express provides the handy response.json() method, which we call with our extracted data. The resulting data looks like this:

Now What?

The starter app uses cheerio to convert some HTML into JSON, but once you've got the data, the power is yours! Remix the app and build your own page scraper. Why not monitor prices on your favorite online store? Liberate data trapped in HTML tables! Watch for sneaky changes to your senator's Wikipedia page! Scraping is the "first API", and cheerio makes it easy.

One Last Thing

Screen scraping is super useful, but not all website owners love the idea of people grabbing the information off their pages. It's always a good idea to check a website's terms and conditions before running any automated scrapers against its content, to be sure you're not getting yourself on someone's naughty list. Also, remember to be polite! If you're requesting data from a website repeatedly, you could be overwhelming their server or slowing the site down for other visitors.
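One simple way to follow that advice in an app like this one is to cache the scraped result for a few minutes, so that many visitors loading the widget add up to at most one request to the source site per interval. The sketch below is plain JavaScript with no dependencies; the createCachedScraper name and the five-minute default are hypothetical choices, and the clock is injectable only so the behavior is easy to check.

```javascript
// Sketch: wrap an async scrape function so its result is reused for ttlMs
// milliseconds, and concurrent callers share a single in-flight request.
function createCachedScraper(scrape, { ttlMs = 5 * 60 * 1000, now = Date.now } = {}) {
  let cachedValue;
  let cachedAt = -Infinity; // nothing cached yet
  let inFlight = null;

  return async function cachedScrape() {
    // Serve the cached copy while it is still fresh.
    if (now() - cachedAt < ttlMs) {
      return cachedValue;
    }
    // Start a scrape only if one isn't already running; others wait on it.
    if (!inFlight) {
      inFlight = Promise.resolve()
        .then(() => scrape())
        .then((value) => {
          cachedValue = value;
          cachedAt = now();
          return value;
        })
        .finally(() => {
          // Clear the marker on success or failure so the next call can retry.
          inFlight = null;
        });
    }
    return inFlight;
  };
}
```

In the starter app, the route could then await a cached wrapper around the fetch-and-parse step instead of hitting the source site on every request. Failed scrapes are not cached, so the next visitor simply triggers a fresh attempt.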

Happy scraping!